*This article was originally published on the SAS blog by Dwijendra Dwivedi*

DataOps increases the productivity of AI practitioners by automating data analytics pipelines and accelerating the process of moving from ideas to innovations. DataOps best practices make raw data polished and useful for building AI models.

Models must work on the input data as well as on the scoring data once the model is operational. Therefore, combining ModelOps and DataOps can significantly speed up the model development and deployment process. This, in turn, provides a higher return on investment in projects based on artificial intelligence or deep learning models.

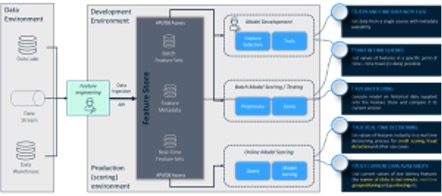

This article focuses on how to bring DataOps and ModelOps together. One way to combine the two is through a centralized machine learning feature library, or “feature store”. This increases efficiency in how features are reused. It also improves quality because standardizing features creates a single definition accepted across the organization. Bringing this library into the model development process and into an end-to-end analytical lifecycle provides several benefits in improving time to market and overall efficiency (see Figure 1).

Feature Repository Process Flow

Figure 2 shows a general data interaction flow and how users interact with a feature store.

Typically, a data scientist who wants to build a new model starts by browsing the existing features in the feature store. If the desired features are not available, the data scientist or data engineer should create a new set of features and provide the appropriate data to add them to the feature repository. This data can then be queried, joined, and manipulated with other feature sets to build a training set (also known as an analytic base table) for model development. If the model is used in production, then both the data calculations and the ingestion process need to be operationalized.

If the features are already available in the feature store, the data scientist can retrieve them for model development. They may, however, need to get new data for the selected features (for example, if the feature store contains data for 2022, but the model needs to be trained on 2021 data). The ability to search through metadata and browse existing features is key to the development process because it shortens the development cycle and improves overall efficiency. Data preparation is the most time-consuming part of the analytics lifecycle, and reuse is crucial.
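To make the idea of joining feature sets into a training set more concrete, here is a minimal sketch in plain Python. The feature sets, the `customer_id` key, the column names, and the `build_training_set` helper are all hypothetical and chosen only for illustration; a real feature store would expose its own query interface.

```python
# Hypothetical feature sets keyed by customer_id; names and values are illustrative only.
demographics = {
    "c1": {"age": 34, "region": "north"},
    "c2": {"age": 51, "region": "south"},
}
transactions = {
    "c1": {"txn_count_30d": 12},
    "c2": {"txn_count_30d": 3},
    "c3": {"txn_count_30d": 7},
}
labels = {"c1": 0, "c2": 1}


def build_training_set(label_by_key, *feature_sets):
    """Inner-join feature sets on the entity key and attach the target label."""
    rows = []
    for key in sorted(label_by_key):
        # Keep only entities that appear in every feature set (inner join).
        if not all(key in fs for fs in feature_sets):
            continue
        row = {"customer_id": key}
        for fs in feature_sets:
            row.update(fs[key])
        row["target"] = label_by_key[key]
        rows.append(row)
    return rows


# One row per labeled customer, with columns from both feature sets.
abt = build_training_set(labels, demographics, transactions)
```

The inner join is a design choice for this sketch: it drops any entity that is missing from one of the feature sets, which keeps the training set free of partially populated rows.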

The components of a feature store

A typical feature store consists of metadata, the underlying storage that contains computed and ingested data, and an interface that allows users to retrieve the data. Metadata can be structured and named in different ways, and there are also differences in the interface capabilities and in the specific technologies that implement the storage. These choices affect the performance of the feature store and range from traditional databases through distributed file systems to complex solutions that combine several approaches and can run on-premises or in public cloud services. There is also a significant distinction between offline and online storage.

Offline storage is used for low-frequency, high-latency data that is calculated at most once a day and usually monthly. Online storage is used for high-frequency, low-latency data that may require instantaneous updates. These may include features such as the number of clicks on the site in the last 15 minutes (providing information about the customer’s level of interest), declined credit card payments in the last hour, or current geographic location for recommendation purposes. Online features are essential and support a wide variety of applications in analytics. Typical use cases and essential components of a feature store are shown in Figure 3.
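The three components named above (metadata, storage, and a retrieval interface) can be sketched as a toy in-memory class. This is not how any particular product is implemented; `FeatureMeta`, `InMemoryFeatureStore`, and all method and feature names are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class FeatureMeta:
    """Metadata describing one feature (hypothetical fields)."""
    name: str
    description: str
    owner: str
    tags: List[str] = field(default_factory=list)


class InMemoryFeatureStore:
    """Toy feature store: a metadata registry, value storage, and a lookup interface."""

    def __init__(self) -> None:
        self._metadata: Dict[str, FeatureMeta] = {}
        # feature name -> entity id -> value
        self._values: Dict[str, Dict[str, Any]] = {}

    def register(self, meta: FeatureMeta) -> None:
        self._metadata[meta.name] = meta
        self._values.setdefault(meta.name, {})

    def ingest(self, name: str, values: Dict[str, Any]) -> None:
        if name not in self._metadata:
            raise KeyError(f"unknown feature: {name}")
        self._values[name].update(values)

    def search(self, term: str) -> List[str]:
        """Return names of features whose name, description, or tags contain the term."""
        term = term.lower()
        return sorted(
            m.name
            for m in self._metadata.values()
            if term in m.name.lower()
            or term in m.description.lower()
            or any(term in t.lower() for t in m.tags)
        )

    def get_features(self, entity_id: str, names: List[str]) -> Dict[str, Any]:
        """Fetch the requested features for one entity; missing values come back as None."""
        return {n: self._values[n].get(entity_id) for n in names}


store = InMemoryFeatureStore()
store.register(FeatureMeta("clicks_15m", "Site clicks in the last 15 minutes", "web-team", ["online"]))
store.register(FeatureMeta("avg_balance_30d", "Average account balance over 30 days", "risk-team", ["offline"]))
store.ingest("clicks_15m", {"c1": 7})

found = store.search("click")
row = store.get_features("c1", ["clicks_15m", "avg_balance_30d"])
```

Searching the metadata before computing anything new is the step that lets a data scientist discover and reuse an existing feature instead of rebuilding it.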
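An online feature such as "clicks in the last 15 minutes" is essentially a sliding-window count. The sketch below shows one simple way to maintain it in memory; the `SlidingWindowCounter` class is hypothetical, and it assumes events arrive in timestamp order and that `count` is called with non-decreasing times. A production system would typically delegate this to a streaming engine.

```python
from collections import deque
from datetime import datetime, timedelta


class SlidingWindowCounter:
    """Counts events that fall inside a trailing time window."""

    def __init__(self, window: timedelta) -> None:
        self.window = window
        self._events = deque()  # timestamps, oldest first

    def add(self, ts: datetime) -> None:
        # Assumes timestamps are appended in non-decreasing order.
        self._events.append(ts)

    def count(self, now: datetime) -> int:
        # Evict events at or before the cutoff, then count what remains.
        cutoff = now - self.window
        while self._events and self._events[0] <= cutoff:
            self._events.popleft()
        return len(self._events)


clicks = SlidingWindowCounter(timedelta(minutes=15))
t0 = datetime(2022, 1, 1, 12, 0)
for offset in (0, 5, 14):
    clicks.add(t0 + timedelta(minutes=offset))

# At 12:16 the click from 12:00 has left the 15-minute window.
recent = clicks.count(t0 + timedelta(minutes=16))
```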


Figure 3: Logical feature store architecture and use cases

Having features and variables stored centrally allows you to monitor how and what data is calculated. Some feature stores provide statistics that measure data quality, such as the number or percentage of missing values, as well as more complex approaches, including outlier detection and variation over time. Custom code is used to evaluate data and apply monitoring based on business rules. Monitoring is important for data quality, especially when combined with automated, rules-based alerts.
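As an illustration of rules-based monitoring, the following sketch computes the percentage of missing values, flags outliers with a simple z-score test, and turns both into alert messages. The function names, the 10% missing-value limit, and the threshold of 3 standard deviations are assumptions for this example, not settings from any specific feature store. Note that a z-score test needs a reasonably large sample to flag anything at this threshold.

```python
from statistics import mean, stdev


def missing_pct(values):
    """Percentage of entries that are None."""
    if not values:
        return 0.0
    return 100.0 * sum(v is None for v in values) / len(values)


def zscore_outliers(values, threshold=3.0):
    """Values lying more than `threshold` sample standard deviations from the mean."""
    present = [v for v in values if v is not None]
    if len(present) < 2:
        return []
    mu, sigma = mean(present), stdev(present)
    if sigma == 0:
        return []
    return [v for v in present if abs(v - mu) / sigma > threshold]


def check_feature(name, values, max_missing_pct=10.0, threshold=3.0):
    """Apply two illustrative business rules and return any alert messages."""
    alerts = []
    pct = missing_pct(values)
    if pct > max_missing_pct:
        alerts.append(f"{name}: {pct:.1f}% missing exceeds limit of {max_missing_pct:.1f}%")
    outliers = zscore_outliers(values, threshold)
    if outliers:
        alerts.append(f"{name}: {len(outliers)} outlier(s) beyond {threshold} standard deviations")
    return alerts


# Hypothetical feature values: twenty typical readings and one extreme one.
alerts = check_feature("txn_amount", [10] * 20 + [100])
```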

The key benefits and challenges of a feature store

Implementing ModelOps in an organization provides a huge efficiency gain. It reduces time to market for analytical models and improves their effectiveness through automated deployment and constant monitoring. Feature stores add even more, improving and automating data preparation (via DataOps) and shortening model deployment time. Key benefits include:

- Reduced time to market through faster model development and operationalization with data deployment in mind.

- Structured processes with a single point of access to search for data and a transparent development process with explicit responsibilities.

- Easy integration, as data scientists can use existing features.

- Efficient collaboration, as features created by one developer can be immediately reused by others.

Feature stores also bring some challenges. The most important is how to operationalize online or real-time features for models in production. This requires a streaming engine, which may differ from the data processing engine used to ingest data into the feature store. This may require recoding, which lengthens the implementation of the model and is error-prone.

Another problem occurs when features are available but there is no supporting data in storage. This requires users to go back to how the data was calculated, then repeat the calculation and ingestion for the required period. This usually requires a data engineer and lengthens the model implementation process.
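The backfill problem described above can be sketched as: find the periods with no stored data, then rerun the feature calculation for each of them. The monthly partitioning, the `backfill` helper, and the stand-in `compute` function are all hypothetical; in practice the calculation would be the feature's original pipeline.

```python
from datetime import date


def month_range(start, end):
    """Yield the first day of each month from start to end, inclusive."""
    year, month = start.year, start.month
    while (year, month) <= (end.year, end.month):
        yield date(year, month, 1)
        month += 1
        if month > 12:
            year, month = year + 1, 1


def missing_periods(required_start, required_end, available):
    """Months in the required range that have no stored data."""
    return [m for m in month_range(required_start, required_end) if m not in available]


def backfill(compute, storage, required_start, required_end):
    """Recompute and store the feature for every missing month; return the filled months."""
    gaps = missing_periods(required_start, required_end, set(storage))
    for period in gaps:
        storage[period] = compute(period)
    return gaps


# The store holds January 2022 only, but training needs November 2021 to February 2022.
storage = {date(2022, 1, 1): 5}
filled = backfill(lambda period: period.month, storage, date(2021, 11, 1), date(2022, 2, 1))
```

Existing partitions are left untouched, so rerunning the backfill for the same range does no extra work.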

The bottom line

Bringing together DataOps and ModelOps using a feature store allows organizations to easily adopt new data sources, create new features, and operationalize them in production. This technological shift is leading to an organizational shift in automating the analytics lifecycle. The DataOps movement is key to every digital transformation and a way to meet the demands of a rapidly changing world.

For further reading, check out ModelOps with SAS and Microsoft, a white paper that explores how SAS and Microsoft have built integrations between SAS® Model Manager and Microsoft Azure Machine Learning. Both are hubs for ModelOps processes and make it possible to deploy ModelOps with the benefit of streamlined workflow management.